Securing a $100M Lightning node

Published June 13, 2023

Summary

TL;DR: ACINQ is one of the main developers and operators of the Lightning Network, an open payment network built on top of Bitcoin. Because private keys need to be “hot” (always online), operating a Lightning Network node poses serious security challenges.

After years of R&D on how to secure our Lightning node, we have settled on a combination of AWS Nitro Enclaves (an Isolated Compute Environment) and Ledger Nano (a signing device with a trusted display). This setup offers what we believe is the best trade-off between security, flexibility, performance, and operational complexity for running a professional Lightning node.

1. Context

The Lightning Network is a fast, scalable, trust-minimized, open network of nodes that relay payments. By nature, these nodes are reachable from the Internet, process real-time transactions, and manage private keys that control the operator's funds. A Lightning node is essentially a hot wallet, a class of software known to be a prime target for hackers.

Depending on the node operator's activity, the funds at risk are not necessarily the operator's own. For traditional exchanges or custodial wallets, they are users' funds. In our case (routing node and self-custodial wallet provider), it is our own funds that are at risk.

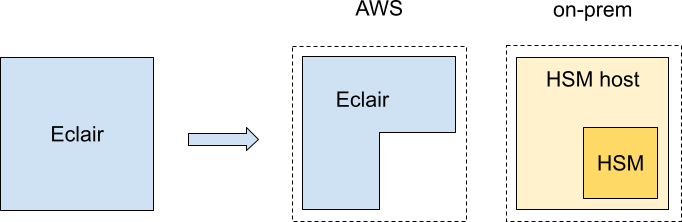

We have developed an open-source Lightning implementation, Eclair, specifically designed for large workloads. Eclair is written in Scala and runs on the JVM. Based on the actor model, Eclair can easily scale to a large number of payment channels, with a high transaction volume. We detailed the architecture of Eclair in a previous blog post.

Eclair is what powers the ACINQ node, which currently manages hundreds of BTC and tens of thousands of channels. We expect both of those numbers, and the level of risk, to grow dramatically in the coming years. We knew from the start that security would be a critical requirement for our success, which is why we started researching the topic early, about 4 years ago.

We spent the first 3 years writing a full Lightning implementation for an off-the-shelf HSM (Hardware Security Module). It worked and we were close to releasing it. But then we came across AWS Nitro Enclaves and found out that it was a superior solution for our use case, in almost all aspects. So we changed course, designed an original solution that also involves a Ledger Nano for some authentication operations, and are now happily running Eclair on Nitro Enclaves in production.

2. Scope and Assumptions

What are we trying to protect against?

The following are in scope:

- compromised production servers (including root access)

- compromised development and administration PCs/laptops

- compromised cloud provider administrators and operators

The following are out of scope:

- LN protocol vulnerabilities

- Eclair vulnerabilities

- Bitcoin eclipse attacks

- Denial-of-Service attacks

3. A few words about Lightning

Lightning is a network of payment channels, in which any node can pay any other node it can find a path to.

Payment channels are anchored in the Bitcoin blockchain, but payments made through a channel do not need to be recorded on-chain, hence the name “off-chain payments”. This is what allows Lightning to scale to virtually unlimited throughput.

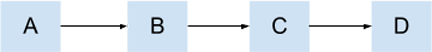

When A pays D through nodes B and C, what actually happens is that A pays B, B pays C, and C pays D, and by design the Lightning protocol ensures that these sub-payments will either all fail or all succeed atomically. It follows that in this payment, B and C are intermediaries, but not trusted intermediaries.
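The all-or-nothing property comes from hash time-locked contracts (HTLCs): every hop locks its sub-payment to the same payment hash, so the preimage that D reveals to get paid is exactly what lets C, then B, claim their own incoming payments. Below is a toy model, assuming made-up amounts (in millisatoshi) and expiries; the `Htlc` class is hypothetical, ignores the timeout refund path, and is not how Eclair represents HTLCs.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Htlc:
    """Toy HTLC: the downstream hop can claim `amount_msat` by revealing the
    preimage of `payment_hash`. The `cltv_expiry` refund path is not modelled."""
    amount_msat: int
    payment_hash: bytes
    cltv_expiry: int

    def can_claim(self, preimage: bytes) -> bool:
        return hashlib.sha256(preimage).digest() == self.payment_hash


# D picks a secret and only shares its hash with A (e.g. in an invoice).
preimage = b"secret known only to D"
payment_hash = hashlib.sha256(preimage).digest()

# Each hop locks funds to the SAME hash; amounts shrink by each routing fee
# and expiries shrink so that every hop has time to claim upstream.
a_to_b = Htlc(1_002_000, payment_hash, 800_144)
b_to_c = Htlc(1_001_000, payment_hash, 800_100)
c_to_d = Htlc(1_000_000, payment_hash, 800_060)

# Settlement flows backwards: D reveals the preimage to claim from C, which
# lets C claim from B, and B claim from A. Without it, nobody can claim.
hops = [c_to_d, b_to_c, a_to_b]
all_settled = all(h.can_claim(preimage) for h in hops)
none_settled = not any(h.can_claim(b"wrong guess") for h in hops)
```

The decreasing amounts are the routing fees kept by B and C for their service.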

In Lightning-speak, B and C are called “routing” nodes, and this is what we do at ACINQ: we don't send or receive payments; we always forward them.

4. Lightning on an HSM

4.1 Securing keys

How hard can it be to secure a Lightning node? Surely it is just a matter of protecting a few private keys, right? Well, not quite. But let's assume for a minute that it would be enough.

When it comes to protecting cryptographic keys, hardware is king. Hardware Security Modules (HSMs) exist for that purpose. An HSM can be thought of as a big smart card that you plug into your server. Thankfully, the cryptographic methods used by the Lightning protocol are fairly standard and can be found on off-the-shelf HSMs.

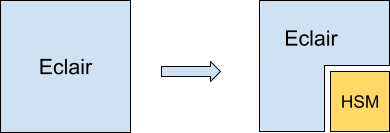

Here is what a naive setup would look like:

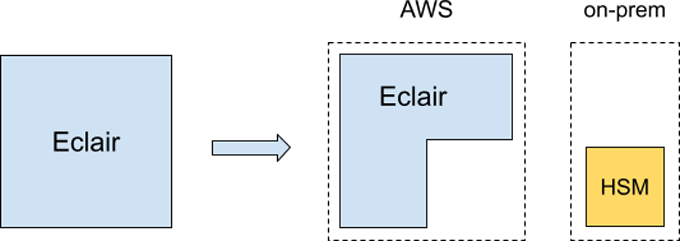

That seems straightforward, but there is a first catch: our node runs on AWS, and we can't just plug a physical card into their servers. We need to self-host the HSM, but on the other hand, we don't want to lose the flexibility that a cloud provider like AWS offers (the Eclair box is itself a distributed application). This leads us to split our deployment: Eclair stays on AWS, while the HSM is hosted by us.

Now, we cannot simply have the HSM blindly sign whatever Eclair sends to it. Remember that, as a starting point, our servers are assumed to be compromised. There has to be some context attached somehow, and we need to go a bit deeper.

4.2 Securing payments

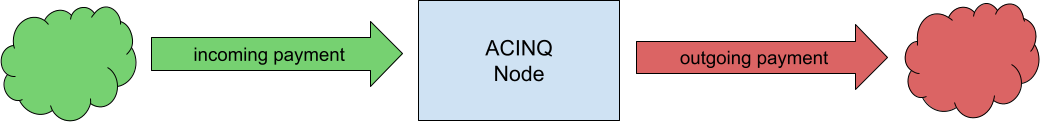

Let's zoom in on what it means exactly to route payments on Lightning. As shown below, for each individual payment, there is an incoming part and an outgoing part.

The problem to solve seems rather straightforward: we just need to make sure that for every outgoing payment, there is a matching incoming payment.

In order to make that assessment, the HSM needs to be aware of a few more things, and, most importantly, it must understand what a Lightning payment is. In other words, we have to implement a subset of the Lightning protocol on the HSM.
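At its core, the check pairs each outgoing HTLC with an incoming one that carries the same payment hash, at least as much money (plus our fee), and a later expiry. The sketch below is illustrative only: the `Htlc` record, the `fee_msat` schedule and `MIN_CLTV_DELTA` are hypothetical values, not Eclair's or ACINQ's actual policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Htlc:
    channel_id: str
    payment_hash: bytes
    amount_msat: int
    cltv_expiry: int


MIN_CLTV_DELTA = 144  # hypothetical: blocks we keep to claim upstream in time


def fee_msat(amount_msat: int, base_msat: int = 1_000, ppm: int = 100) -> int:
    """Hypothetical routing fee: base fee plus a proportional part (parts per million)."""
    return base_msat + amount_msat * ppm // 1_000_000


def may_forward(incoming: Htlc, outgoing: Htlc, valid_channels: set[str]) -> bool:
    """Sign the outgoing HTLC only if a matching incoming HTLC covers it."""
    if incoming.channel_id not in valid_channels:
        return False  # the money must come from a real, open channel
    if incoming.payment_hash != outgoing.payment_hash:
        return False  # both sides must settle with the same preimage
    if incoming.amount_msat < outgoing.amount_msat + fee_msat(outgoing.amount_msat):
        return False  # never pay out more than we receive, plus our fee
    if incoming.cltv_expiry < outgoing.cltv_expiry + MIN_CLTV_DELTA:
        return False  # we need time to claim upstream once downstream settles
    return True
```

The first test, whether the incoming channel is real, is the one that cannot be answered from payment data alone.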

Even though we had already implemented Lightning twice (in Scala, and in Kotlin for another project) and had previous experience with smart cards, developing an HSM application proved difficult. An HSM is an embedded device with its own proprietary OS, runtime, system, and cryptographic APIs, and it must be programmed in low-level C.

Moreover, HSMs are extremely constrained in terms of memory. They are designed to sign batches of documents, with internal state updates mostly limited to incrementing counters, and there is basically no local storage available.

This turned out to be the hardest part, because Lightning is a stateful protocol: payments go through several state transitions that require peers to sign, store, and exchange data that must be verified at every step. Incoming and outgoing payments also need to be linked in order to perform the actual verification.

We had no choice but to store all this data, encrypted, on the HSM host's filesystem and pass it back and forth with each sub-step of each payment. At this point, our deployment would span Eclair on AWS, the self-hosted HSM host storing the encrypted state, and the HSM itself.

Not only did we keep implementing more and more of the Lightning protocol on the HSM, we also ended up splitting our application into three parts. This is starting to look like a nightmare from an operational point of view.
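Persisting state on an untrusted host means the HSM must detect both tampering and rollback to an older (but validly sealed) state. The source does not describe ACINQ's actual format; the toy model below assumes an HMAC-authenticated blob plus an on-device version counter, and omits encryption to stay within the Python standard library (a real design would use authenticated encryption such as AES-GCM). `StateSealer` is a hypothetical name.

```python
import hashlib
import hmac
import json
import os


class StateSealer:
    """Toy HSM that keeps only a secret key and a monotonic counter internally,
    and hands authenticated state blobs to its host for storage."""

    def __init__(self) -> None:
        self._key = os.urandom(32)  # never leaves the device
        self._version = 0           # the only mutable on-device state

    def seal(self, state: dict) -> bytes:
        self._version += 1
        body = json.dumps({"v": self._version, "state": state}, sort_keys=True).encode()
        tag = hmac.new(self._key, body, hashlib.sha256).digest()
        return tag + body  # assumption: a real design would also encrypt `body`

    def unseal(self, blob: bytes) -> dict:
        tag, body = blob[:32], blob[32:]
        expected = hmac.new(self._key, body, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            raise ValueError("state blob was tampered with")
        record = json.loads(body)
        if record["v"] != self._version:
            raise ValueError("stale state blob (rollback attempt?)")
        return record["state"]
```

Every sub-step of a payment turns into a seal/unseal round trip between the HSM and its host, which illustrates why this design is slow and fragile.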

But at least, we are finally done, right? Well, we did make sure that payments happening within valid channels are okay, but the HSM is not able to tell on its own which channels are valid. For that, it needs access to the blockchain, and we have to dig deeper.

4.3 Securing channels

Lightning payments are only safe because they happen in channels that are anchored in the Bitcoin blockchain.

An attacker who successfully gained access to our HSM host could feed the HSM with bogus payments, routed from non-existent channels to real channels. Our balance check between incoming amount and outgoing amount would pass, but the incoming part would be fake and our wallet would be effectively drained.

This is why the HSM needs to have knowledge of the blockchain, which is the ultimate source of truth.

At first, we thought that we could authenticate channels rather simply, by presenting the HSM with a funding transaction and a Merkle proof linking that transaction to a block with a valid proof of work. Of course, it turned out to be more complicated: there are various corner cases, such as previously valid channels that were later closed. The HSM must not miss those either, otherwise it can easily be fooled.
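Authenticating a funding transaction this way combines two standard Bitcoin checks: folding a Merkle branch from the txid up to the Merkle root committed in a block header, and checking that the header's double-SHA-256 hash meets its difficulty target. A minimal sketch follows; the function names are hypothetical, and it deliberately leaves out the hard parts mentioned above (chain selection, difficulty adjustment, and funding outputs that were later spent).

```python
import hashlib


def dsha256(data: bytes) -> bytes:
    """Bitcoin's double SHA-256."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def merkle_root_from_branch(txid: bytes, branch: list[bytes], index: int) -> bytes:
    """Fold a Merkle branch (sibling hashes from leaf to root, internal byte
    order) for the transaction at position `index` back into a Merkle root."""
    h = txid
    for sibling in branch:
        h = dsha256(sibling + h) if index & 1 else dsha256(h + sibling)
        index >>= 1
    return h


def bits_to_target(bits: int) -> int:
    """Decode the compact 'nBits' difficulty target from a block header."""
    exponent, mantissa = bits >> 24, bits & 0x007FFFFF
    if exponent <= 3:
        return mantissa >> (8 * (3 - exponent))
    return mantissa << (8 * (exponent - 3))


def header_has_valid_pow(header: bytes) -> bool:
    """Check an 80-byte header's hash against the target in its own nBits.
    A real verifier must also check nBits against the expected difficulty
    and that the header belongs to the best known chain."""
    if len(header) != 80:
        raise ValueError("block headers are 80 bytes")
    bits = int.from_bytes(header[72:76], "little")
    return int.from_bytes(dsha256(header), "little") <= bits_to_target(bits)


def tx_in_block(txid: bytes, branch: list[bytes], index: int, header: bytes) -> bool:
    merkle_root = header[36:68]  # layout: version|prev hash|merkle root|time|bits|nonce
    return header_has_valid_pow(header) and merkle_root_from_branch(txid, branch, index) == merkle_root
```

As a sanity check, the Bitcoin genesis block contains a single transaction whose txid equals the header's Merkle root, so `tx_in_block(header[36:68], [], 0, header)` holds for the genesis header.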

Eventually, we found a way to work with Bitcoin data without implementing a full node in an HSM. However, securely verifying Lightning payments and channels is clearly much more work than what we anticipated when we started.

4.4 Conclusion

We designed, implemented, and tested a complete application for an actual HSM that implements most of the Lightning protocol.

We were very satisfied with the level of security achieved, but it came at a great cost: major added complexity and operational burden.

First, the software that we had to write for the HSM was much larger than we expected. What started as just a bit more than a key manager for Eclair ended up being almost a full Lightning implementation. High development and maintenance costs were guaranteed, which is problematic given that the Lightning protocol is still under heavy development. Moreover, proprietary HSM APIs meant strong vendor lock-in.

Second, the physical deployment was split into two completely disjoint parts, and critical application state split into three logical parts (on Eclair, on the HSM host filesystem, and in the HSM itself). Basic restart/upgrade operations would become very difficult and risky, because all those parts need to be kept synchronized.

Last but not least, the I/O and CPU performance of HSMs is appalling compared to high-end servers. Accessing and storing channel states is slow, and signing transactions is slow. HSMs available today would not be able to process more than a few dozen payments per second, not per channel but across all channels.

5. Lightning on AWS Nitro Enclaves

One of the most important things we learned while working with an HSM is that splitting our application into a trusted and an untrusted part is very difficult. Even if we found a better secure runtime and got rid of the performance and persistence issues caused by the lack of local storage on the HSM itself, we would still end up with a complex design: to perform the necessary checks, the signing module needs access to blockchain data and must consistently replicate the state of the node it is connected to, which is very hard to implement, both technically and operationally.

The problem here is that most trusted runtimes, including TEEs (Trusted Execution Environments), were originally designed to protect keys and not much more. They assume that such a split between trusted and untrusted application modules is possible, because most traditional applications work with “trusted” data sources: they simply need to verify that requests are issued by one of these trusted sources (which typically means that requests must be signed with a certificate that the application was configured to trust).

In the Bitcoin and Lightning worlds, there is no concept of a “trusted source”; it is rather the opposite: data is assumed to come from untrusted sources. Protocols define policy checks based on cryptographic proofs that applications must verify. This is fairly complex and requires access to the underlying Bitcoin blockchain. What this means is that the “trusted” application that we need to run in a secure environment is more complex than what most trusted runtimes were designed for.

5.1 The rise of Confidential Computing

We are not the only ones trying to use secure runtimes for complex applications: there is a recent trend to implement Confidential Computing Environments that enable users to run entire applications inside secure runtimes, without having to split them between a trusted and untrusted part.

This looks like the best approach: no more consistency issues, limited custom development, and simpler operations.

AWS Nitro Enclaves (which AWS refers to as an Isolated Compute Environment) can be used as such a Confidential Computing Environment and has everything we were looking for: a trusted environment that is almost transparent to use from a deployment point of view, provides the same performance as a standard high-end server, and includes Cryptographic Attestation, which can be used to build trust relationships and manage the deployment of application secrets. It is fairly well documented, has been used by AWS for years, and we already run our nodes on AWS: it was an obvious choice for evaluation.

One of our base assumptions is that we don't want to trust the operators of the cloud provider we rely on. This is an explicit goal of Confidential Computing Environments, and it is mentioned in the initial requirements for the AWS Nitro System:

By design the Nitro System has no operator access. There is no mechanism for any system or person to log in to EC2 Nitro hosts, access the memory of EC2 instances, or access any customer data stored on local encrypted instance storage or remote encrypted EBS volumes. If any AWS operator, including those with the highest privileges, needs to do maintenance work on an EC2 server, they can only use a limited set of authenticated, authorized, logged, and audited administrative APIs. None of these APIs provide an operator the ability to access customer data on the EC2 server. Because these are designed and tested technical restrictions built into the Nitro System itself, no AWS operator can bypass these controls and protections.

We still need to trust the AWS Nitro System, and the team of AWS engineers and security researchers that designed and implemented it. This is expected: no matter what solution we choose, there will always be an element of trust involved (like trusting your HSM vendor for example). Besides, using servers provided and maintained by AWS also protects us from certain types of attacks, such as the ones against SGX described in sgx.fail, which require that attackers control the execution environment.

5.2 Running Eclair inside a Nitro Enclave

Nitro Enclaves can run basically any application and do not require developers to program against a specific set of libraries, as long as the application can live with the following constraints:

- limited I/O: applications running within an enclave can only be accessed from the host, through a VSOCK port.

- no local persistence: data stored or modified within the enclave will be lost when the enclave stops, as if you were using a ramdisk for example.

- Nitro Enclave images are not encrypted: you cannot store secrets in them (otherwise anyone who can access the server on which they’re running would see your secrets).

So, to run Eclair in a Nitro enclave:

- we need to make sure that all I/O, including database I/O, goes through TCP sockets (we cannot use the local file system to store data, for example)

- we need to find a way to “inject” secrets into the application.

Managing network connections: the LINK protocol

Making Eclair run all of its I/O through TCP sockets is straightforward: the only file I/O is application logging, and there are several options for streaming logs out over TCP.

To manage network connections, we developed a “LINK” protocol, based on custom network proxies that run within the enclave and on the host. When a connection from the enclave to an external destination needs to be established, the destination’s IP address is added to the first TCP packet. The proxy that runs outside the enclave, in the parent EC2 instance, reads this IP address and connects to the actual destination.
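The exact LINK wire format is not described here, so the following is a sketch under an assumed layout: the enclave-side proxy prefixes the stream with a one-byte IP version, the raw destination address and a port, and the host-side proxy strips that header before connecting. All names and the header layout are hypothetical.

```python
import ipaddress
import struct

# Hypothetical header layout (assumption, not the actual LINK format):
#   1 byte     IP version (4 or 6)
#   4/16 bytes destination IP address
#   2 bytes    destination port, big-endian
ADDRESS_SIZES = {4: 4, 6: 16}


def encode_link_header(ip: str, port: int) -> bytes:
    """Enclave side: prefix for the first bytes sent to the host proxy."""
    addr = ipaddress.ip_address(ip)
    return struct.pack("!B", addr.version) + addr.packed + struct.pack("!H", port)


def decode_link_header(data: bytes) -> tuple[str, int, bytes]:
    """Host side: return (ip, port, payload to forward to the destination).
    Connections are byte streams, so a real proxy must buffer until the whole
    header has arrived; here `data` is assumed to contain it."""
    if not data or data[0] not in ADDRESS_SIZES:
        raise ValueError("missing or unsupported address header")
    size = ADDRESS_SIZES[data[0]]
    if len(data) < 3 + size:
        raise ValueError("truncated address header")
    raw = data[1:1 + size]
    addr = ipaddress.IPv4Address(raw) if size == 4 else ipaddress.IPv6Address(raw)
    (port,) = struct.unpack("!H", data[1 + size:3 + size])
    return str(addr), port, data[3 + size:]
```

For example, decoding `encode_link_header("203.0.113.7", 9735) + b"hello"` yields the address `203.0.113.7`, port 9735 (Lightning's default) and the payload `b"hello"`. Nothing in this header is authenticated, which is why the enclave must still authenticate the remote end itself.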

Note that this is not secure in itself, and the application inside the enclave needs to make sure that it is actually connected to the correct services (through HTTPS, TLS, or the Noise protocol that we use in Lightning). If that is not possible, then the application needs another way to verify the authenticity of the data that it receives (for example, the Bitcoin protocol is designed with this in mind). And if that is also not possible, then the consequences of someone impersonating the target service need to be evaluated.

With that, we have an application that runs in a Nitro Enclave, and that can connect to the outside world (including the internet).

Securing application private keys and secrets

One of the limitations of Nitro Enclaves is that application images are not encrypted. We cannot embed secrets in them; instead, enclaves must start in an “uninitialized” state and retrieve their secrets (private keys, passwords, …) from a trusted source every time they start. However, even though images are not encrypted, they are signed and cannot be tampered with, so we can embed public keys that we trust and use them to validate data or set up secure communication channels.

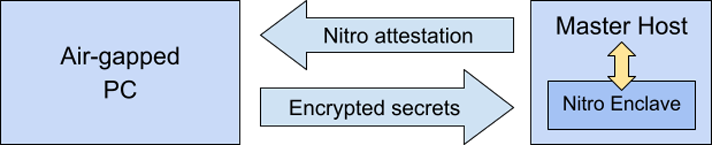

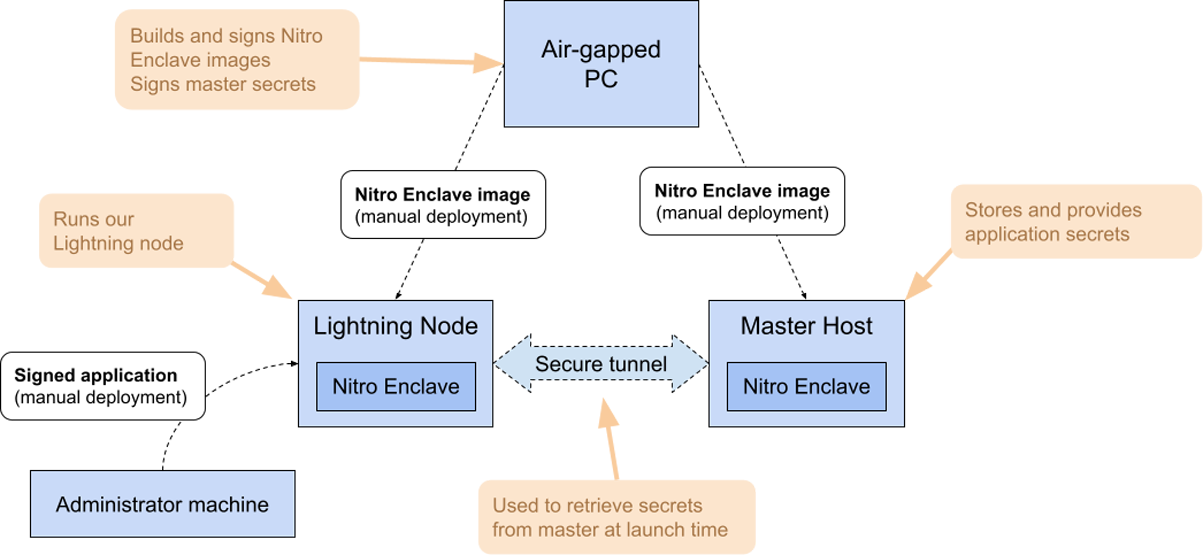

We wanted to trust AWS as little as possible and decided not to use AWS KMS, which would also require our administrators to set up specific policies. Instead, we used Nitro Enclaves again to build a secure “master” repository for our secrets. We leverage Nitro Attestations to establish secure “tunnels” between our application and the master repository.

To safely inject secrets into our master repository, we retrieve its Nitro Enclave’s attestation and use a secure, air-gapped machine to package secrets into an encrypted blob. We then upload that blob to the master’s host, where it is transferred to the master enclave and decrypted. This has to be done every time application secrets are modified or the master host is restarted.

When our Lightning node starts, it creates a secure tunnel to the master enclave through our network proxies and retrieves its secrets.
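On the master side, releasing secrets boils down to comparing the measurements in the caller's attestation against the values recorded for the launcher image. The sketch below assumes the attestation document has already been decoded and its signature chain verified (not shown), and uses placeholder PCR values; the function and constant names are hypothetical.

```python
import hmac
from typing import Mapping

# Measurements recorded when the launcher image was built on the air-gapped
# machine. Nitro PCRs are SHA-384 digests (48 bytes); values are placeholders.
EXPECTED_LAUNCHER_PCRS: dict[int, bytes] = {
    0: bytes.fromhex("11" * 48),  # PCR0: enclave image file
    1: bytes.fromhex("22" * 48),  # PCR1: Linux kernel and bootstrap
    2: bytes.fromhex("33" * 48),  # PCR2: application
}


def attestation_matches(attested_pcrs: Mapping[int, bytes]) -> bool:
    """Policy check run by the master enclave before releasing secrets.
    Assumes the attestation document was already parsed (CBOR/COSE) and its
    certificate chain verified up to the AWS Nitro root; that is not shown."""
    return all(
        index in attested_pcrs and hmac.compare_digest(attested_pcrs[index], expected)
        for index, expected in EXPECTED_LAUNCHER_PCRS.items()
    )


def release_secrets(attested_pcrs: Mapping[int, bytes], secrets: dict) -> dict:
    if not attestation_matches(attested_pcrs):
        raise PermissionError("unexpected enclave measurements")
    # In practice the secrets would be encrypted to the public key carried in
    # the same attestation document, so that only that enclave can read them.
    return secrets
```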

Securing Nitro Enclave image deployment and upgrade

We use the same air-gapped machine to build and sign all Nitro Enclave images, which include the hard-coded public keys and attestations they trust, so that they can establish secure channels with each other.

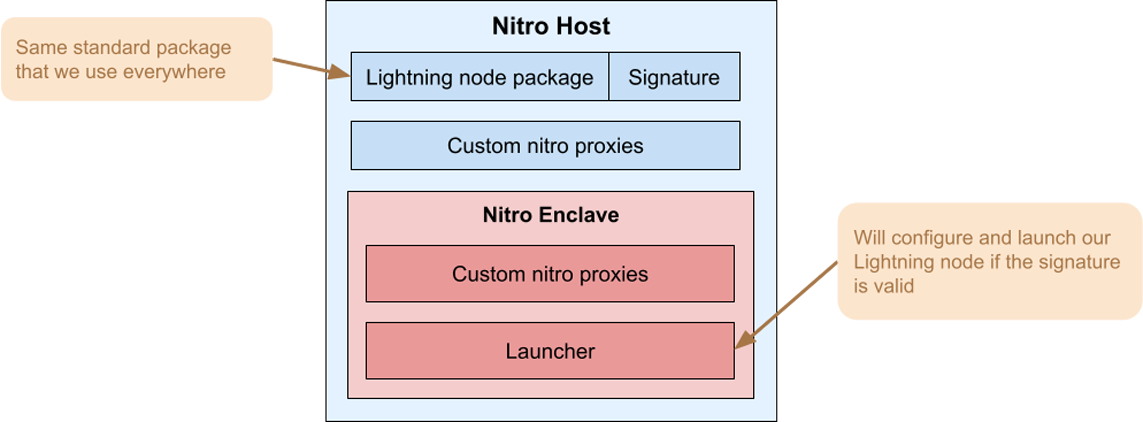

The image that we deploy inside the Nitro Enclave is not Eclair itself but a simple "launcher" application. This is a key part of our solution, because it decouples the lifecycle of the Nitro Enclave image (which changes rarely) from the lifecycle of Eclair (which is updated frequently).

Critically, deploying and upgrading Eclair on our node does not require physical access to our air-gapped machine and remains very similar to what we did pre-Nitro. The Eclair application package is also exactly the same zip file as before.

To run Eclair inside a Nitro Enclave, the process is as follows:

- sign the Eclair package (with a Ledger device, see next section)

- upload the signed package to the Nitro Enclave host

- execute a script that starts the launcher inside a Nitro Enclave and passes it the signed package.

The launcher will verify that the signature is valid, set up secure channels to the master node and retrieve application secrets, create network proxies, create a configuration file, and start Eclair.
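The signature check performed by the launcher can be sketched as follows. The source does not specify the signature scheme; this sketch assumes ECDSA over secp256k1 (Bitcoin's curve) applied to the SHA-256 hash of the package, and `check_package` and its `trusted_keys` whitelist are hypothetical names. The pure-Python curve arithmetic only keeps the example self-contained; a real launcher would use a vetted library.

```python
import hashlib

# secp256k1 parameters: y^2 = x^3 + 7 over GF(P), base point G of order N.
P = 2**256 - 2**32 - 977
N = 0xFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFEBAAEDCE6AF48A03BBFD25E8CD0364141
G = (0x79BE667EF9DCBBAC55A06295CE870B07029BFCDB2DCE28D959F2815B16F81798,
     0x483ADA7726A3C4655DA4FBFC0E1108A8FD17B448A68554199C47D08FFB10D4B8)


def point_add(a, b):
    """Add two curve points in affine coordinates (None is the point at infinity)."""
    if a is None:
        return b
    if b is None:
        return a
    if a[0] == b[0] and (a[1] + b[1]) % P == 0:
        return None
    if a == b:
        lam = 3 * a[0] * a[0] * pow(2 * a[1], -1, P) % P
    else:
        lam = (b[1] - a[1]) * pow((b[0] - a[0]) % P, -1, P) % P
    x = (lam * lam - a[0] - b[0]) % P
    return x, (lam * (a[0] - x) - a[1]) % P


def scalar_mult(k, point):
    """Double-and-add; not constant-time, which is acceptable for public data."""
    result = None
    while k:
        if k & 1:
            result = point_add(result, point)
        point = point_add(point, point)
        k >>= 1
    return result


def ecdsa_verify(pubkey, digest: bytes, sig) -> bool:
    r, s = sig
    if not (1 <= r < N and 1 <= s < N):
        return False
    z = int.from_bytes(digest, "big")
    w = pow(s, -1, N)
    point = point_add(scalar_mult(z * w % N, G), scalar_mult(r * w % N, pubkey))
    return point is not None and point[0] % N == r


def check_package(package: bytes, sig, trusted_keys) -> str:
    """Accept the Eclair package only if a whitelisted deployment device
    signed its SHA-256 hash; return that hash for logging."""
    digest = hashlib.sha256(package).digest()
    if not any(ecdsa_verify(key, digest, sig) for key in trusted_keys):
        raise PermissionError("package is not signed by a trusted device")
    return digest.hex()
```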

5.3 Complementing Nitro Enclaves with Ledger devices

Nitro Enclaves provide us with a secure runtime, but there are still many sensitive operations that require human interaction, such as: signing a new application package, starting and stopping our node, or most management API calls. Using air-gapped machines and Nitro Enclave attestations would not be realistic here from an operational point of view.

This is where Ledger devices shine: their trusted display is the perfect complement to Nitro Enclaves. They can be used to sign these sensitive operations, with a UX that is close to how these devices are used with Bitcoin wallets: instead of validating bitcoin addresses and amounts on their device’s trusted display, administrators validate package hashes or API commands.

Note that using Ledger devices without Nitro Enclaves would not make sense: attackers who gained access to production servers would simply modify the application to bypass any external checks.

Signing and deploying Eclair

We developed a custom Ledger application that we run on Ledger devices that the launcher is configured to trust.

We use our Ledger devices to sign applications: we deploy the same standard package that we use on non-Nitro nodes, built with the same tools. Administrators use their Ledger device to sign the package once they’ve checked the package hash on their Ledger screen. To protect ourselves against “supply chain” attacks, several developers build the app on independent machines, and check that they all find the same package hash (the build is deterministic).
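The deterministic-build cross-check can be sketched as hashing each independently built package and refusing to continue unless all hashes agree; the agreed hash is the one an administrator then expects to see on the Ledger screen. Helper names are hypothetical, and SHA-256 is assumed as the package hash.

```python
import hashlib
from pathlib import Path


def package_hash(path: Path) -> str:
    """SHA-256 of a build artifact, streamed so large zips need little memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def agreed_hash(builds: dict[str, str]) -> str:
    """Given {builder: package hash} from independent machines, return the hash
    only if every build produced the same artifact."""
    distinct = set(builds.values())
    if len(distinct) != 1:
        raise RuntimeError(f"builds disagree or are missing: {builds}")
    return distinct.pop()
```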

Authenticating management API calls

We also rely on Ledger devices to secure sensitive administration tasks, by setting up tunnels directly between the Nitro Enclave’s host and the Ledger device, through the administrator’s PC. These tunnels are used to send the actual API call and its parameters to the administrator’s Ledger device, where they are displayed. Administrators can then approve the request, which is signed by the device; the signature is sent back through the same tunnel and checked inside the Nitro Enclave.

Note that this all happens behind the scenes. For administrators, command-line parameters remain the same; they just need to use a different tool (secure-eclair-cli instead of eclair-cli).

Each Ledger device is individually whitelisted, and the launchers that we run inside Nitro Enclaves are configured to trust different devices for application deployment and application management.
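Putting the previous paragraphs together, the enclave-side gate needs to check that the signing device is whitelisted for the right role and that its signature is valid. The sketch below is a hypothetical structure, not ACINQ's code: the signature check is injected as a function, the command names and role mapping are illustrative, and single-use nonces against replay are an added assumption.

```python
import json
import os
from typing import Callable

# Assumed signature check: verify(device_public_key, message, signature) -> bool.
Verifier = Callable[[bytes, bytes, bytes], bool]

DEPLOYMENT_COMMANDS = {"deploy"}  # hypothetical command names


class CommandGate:
    def __init__(self, whitelist: dict[str, set[bytes]], verify: Verifier) -> None:
        self.whitelist = whitelist        # role ("deployment"/"management") -> device keys
        self.verify = verify
        self.pending: set[bytes] = set()  # challenges issued but not yet used

    def challenge(self, command: str, params: dict) -> bytes:
        """Build the message the administrator's Ledger displays and signs."""
        nonce = os.urandom(16).hex()  # freshness: each signed request is single-use
        msg = json.dumps({"cmd": command, "params": params, "nonce": nonce},
                         sort_keys=True).encode()
        self.pending.add(msg)
        return msg

    def authorize(self, device_key: bytes, msg: bytes, sig: bytes) -> dict:
        """Return the approved request, or raise if any check fails."""
        if msg not in self.pending:
            raise PermissionError("unknown or replayed request")
        self.pending.discard(msg)
        request = json.loads(msg)
        role = "deployment" if request["cmd"] in DEPLOYMENT_COMMANDS else "management"
        if device_key not in self.whitelist.get(role, set()):
            raise PermissionError(f"device is not whitelisted for {role}")
        if not self.verify(device_key, msg, sig):
            raise PermissionError("invalid signature")
        return request
```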

5.4 Securing our Bitcoin node

We use a Bitcoin node for 2 different purposes:

- interact with and monitor the Bitcoin blockchain

- manage our on-chain funds: we did not build a custom wallet but instead use Bitcoin Core’s built-in wallet

Bitcoin Core doesn't run inside a Nitro Enclave, but it can still benefit from the security that Nitro Enclaves bring:

First, monitoring of the Bitcoin blockchain is done by Eclair inside a Nitro Enclave: we can cryptographically verify that transactions exist and are signed correctly, and detect and react when they are spent. These checks cannot be bypassed by attackers without forging fake bitcoin data, which would be extremely expensive. Unlike with an HSM, no custom development is needed here because these checks are already implemented in Eclair.

To protect ourselves against eclipse attacks, Eclair connects to different bitcoin data sources in order to verify that our main bitcoin node is still in sync with the Bitcoin network.
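A simple form of this cross-check compares the chain height reported by our main node with the heights seen by independent sources, and raises an alarm when several of them are clearly ahead. The thresholds and function name below are hypothetical; a real check would also verify the proof of work of the headers those sources return instead of trusting raw heights.

```python
def main_node_looks_eclipsed(main_height: int, other_heights: dict[str, int],
                             max_lag: int = 2, quorum: int = 2) -> bool:
    """Flag the main bitcoin node when at least `quorum` independent sources
    report a chain tip more than `max_lag` blocks ahead of it.

    Requiring a quorum means a single misbehaving source cannot raise the
    alarm on its own; both parameters are hypothetical and need tuning."""
    ahead = [name for name, height in other_heights.items()
             if height - main_height > max_lag]
    return len(ahead) >= quorum
```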

Last but not least, we can also use Nitro Enclaves to protect our on-chain funds: Bitcoin Core can be configured to delegate private key management to an external signer through its Hardware Wallet Interface (HWI). Wallet operations (block and transaction matching, balance tracking, coin selection) are done by Bitcoin Core but private keys are managed by Eclair and never leave the Nitro Enclave. When needed, Bitcoin Core will ask Eclair to sign transactions, and Eclair can check that they are valid funding transactions that it created itself.
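Because Eclair only signs what it asked for, the signing policy can be as simple as "exactly one output is a funding output I registered, and everything else goes back to my own wallet". The following sketch models transactions as bare output lists; `FundingSignerPolicy` and its rules are hypothetical and skip the input and fee checks that a real signer would need.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TxOut:
    script_pubkey: bytes
    amount_sat: int


class FundingSignerPolicy:
    """Hypothetical enclave-side policy for signing requests from Bitcoin Core."""

    def __init__(self, own_scripts: set[bytes]) -> None:
        self.own_scripts = own_scripts             # scripts of our own on-chain wallet
        self.expected_funding: set[TxOut] = set()  # funding outputs Eclair decided to create

    def register_funding(self, output: TxOut) -> None:
        """Called by the node itself when it decides to open a channel."""
        self.expected_funding.add(output)

    def may_sign(self, outputs: list[TxOut]) -> bool:
        """Sign only a transaction that creates exactly one expected funding
        output and sends everything else back to our own wallet."""
        funding = [o for o in outputs if o in self.expected_funding]
        others = [o for o in outputs if o not in self.expected_funding]
        return len(funding) == 1 and all(o.script_pubkey in self.own_scripts for o in others)
```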

5.5 Operational impact

Changing a basic, non-security-sensitive configuration parameter is the same as pre-Nitro: update the appropriate configuration file (which we store on GitHub and which does not contain any sensitive information), then stop and restart Eclair (which requires a trusted Ledger device), exactly as before. There is no need to access our air-gapped signing machine.

Deploying a new version of Eclair is almost the same as pre-Nitro, with an additional signing step: build a new application package, and sign it with a trusted Ledger device, which just takes a few seconds. There is no need to access the air-gapped signing machine.

Modifying the launchers that run inside Nitro Enclaves, or the configuration secrets used by our secure repository, is more complex. New images have to be built and signed, secrets need to be encrypted, the master image needs to be restarted, configured with its encrypted secrets, and then the launcher image needs to be restarted too. This requires physical access to our air-gapped signing machine but is a very uncommon procedure.

From a provisioning and performance perspective, nothing has changed: our application runs on the same type of servers and with the same performance as before.

Maintainability is also not affected: we built tools to configure and run our application inside Nitro Enclaves, as well as a custom Ledger application to easily and securely sign and deploy new packages. But the Lightning node that is actually running is the same as before, with the same build process and just an additional signing step. ACINQ developers are not impacted by Nitro at all: Eclair and our Nitro toolkits are completely independent projects.

6. Conclusion

Nitro Enclaves enabled us to design two different layers of security tools and processes:

- To run our Lightning node in a Nitro Enclave, we built a set of tools and containers that allow us to use our application without any modifications. Creating and updating these containers is a very sensitive but also very uncommon operation.

- To secure the maintenance and administration of our Lightning node, we built a custom Ledger application that requires human interaction for sensitive operations (deploying and upgrading our Lightning node software, opening or closing Lightning channels).

From a security point of view, our requirements are met: Eclair runs inside an enclave that cannot be accessed or modified, and its deployment and management are protected by hardware devices that include a secure element. Even though we still believe that a self-hosted HSM-based solution would probably be even more secure, the associated operational and maintenance costs would simply not be realistic (assuming we could solve the very serious performance issues we had with current HSMs in the first place).

Being able to run Eclair without any custom changes also means that we are not locked in to AWS (as opposed to a solution based on custom HSM firmware, which would be extremely difficult to port to a different device), and we could easily move to another production environment. Since most cloud providers are deploying Confidential Computing Environments, what was done here with Nitro Enclaves should be replicable on other platforms.

Anyone planning to deploy a high-capacity Lightning node must consider not only how to securely protect their working capital, but also the ease of operational tasks like node management and upgrades. We believe that the solution we built with AWS Nitro Enclaves and Ledger hardware wallets is the best trade-off we can achieve between security, cost, and maintainability.